Working with huge amounts of geographic data is not an easy task, so we decided to split the job across a cluster. Using Docker, we were able to process petabytes of data and to set up self-hosted world maps in 10 minutes. Our Docker-based solution made it to the DockerCon conference.

Self-hosted world maps

When a company considers using a map, the first option it looks at is usually one of the big free map providers. This is what most people know and regard as free. In fact, it is always paid for: either with the user's personal data or, when it comes to extensive usage by an organization, with a significant amount of money. Organizations are billed for using maps offline or behind a firewall, for asset tracking, or simply when the number of visitors grows. All those limitations can be avoided with self-hosted maps.

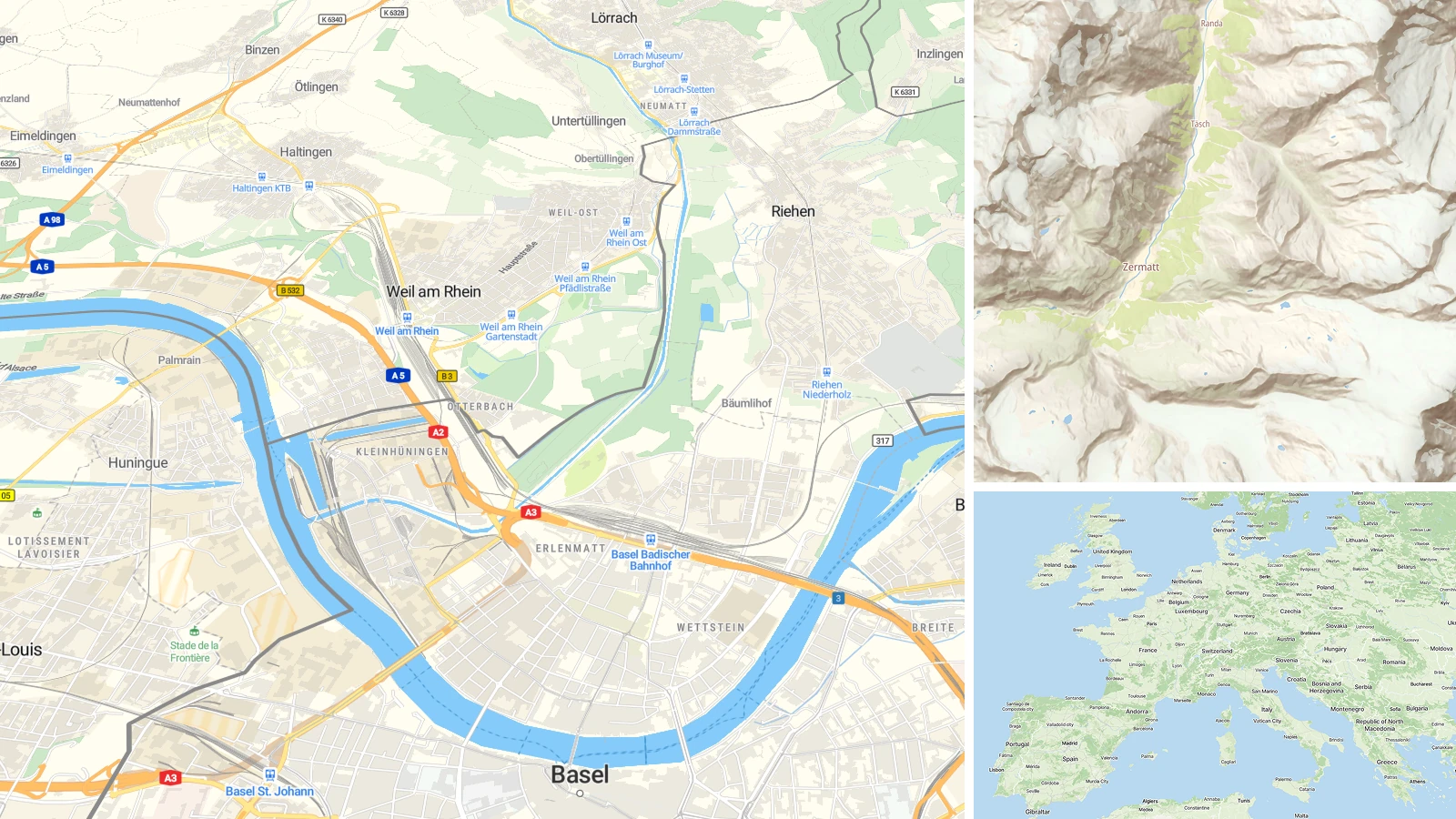

With OpenMapTiles, we offer world maps based on OpenStreetMap. This collaborative mapping project includes all the important infrastructure for a base map, such as streets, houses, roads, land-use data, points of interest, and much more. On top of this, paying customers also get access to a digital elevation model and contour lines for building outdoor maps, as well as satellite imagery of the whole world. You can also add your own geodata and use the same map style to customize its look.

OpenMapTiles Server, which lets you run all of this from your own infrastructure, uses Docker container technology. The hardware requirements are so low that you can run it even on your laptop. There is also no need to be connected to the internet, so the maps can be used offline or behind a firewall. The OpenMapTiles project is open source, and its community-driven repository can be found on GitHub.

Petr Pridal and Martin Mikita from the MapTiler team presenting on hosting maps from your own infrastructure at DockerCon 2017

World map in 10 minutes using Docker

The OpenMapTiles Server is available on Docker Hub. If you already have Docker installed, you can launch the container from Kitematic or run this command:

    docker run --rm -it -v $(pwd):/data -p 8080:80 klokantech/openmaptiles-server

Then visit http://localhost:8080/ in your browser. A short wizard guides you through the setup: you select whether you want to serve the whole planet, a country, or a city; which of the prepared map styles you want to use (or your own); the default language for visitors; and which services you want to run (raster tiles, vector tiles, WMTS, WMS, or static maps).
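Tile services like the ones listed in the wizard usually address each tile by zoom level, column, and row. The sketch below shows the standard Web Mercator XYZ conversion from a longitude and latitude to tile indices. It assumes the server follows that common scheme; the function name `lonlat_to_tile` is illustrative and not part of OpenMapTiles Server.

```python
import math

# Latitude limit of the Web Mercator projection, in degrees.
MAX_LAT = 85.05112878


def lonlat_to_tile(lon, lat, zoom):
    """Return the (x, y) indices of the XYZ tile containing a point.

    Assumes the common Web Mercator XYZ scheme, where tile (0, 0)
    is the top-left tile and each zoom level has 2**zoom tiles per axis.
    """
    n = 2 ** zoom
    # Clamp latitude to the range the projection can represent.
    lat = max(min(lat, MAX_LAT), -MAX_LAT)
    x = int((lon + 180.0) / 360.0 * n)
    y = int((1.0 - math.asinh(math.tan(math.radians(lat))) / math.pi) / 2.0 * n)
    # Keep indices inside the grid at the extreme edges (lon = 180, lat = -MAX_LAT).
    x = max(0, min(x, n - 1))
    y = max(0, min(y, n - 1))
    return x, y
```

At zoom 0 every point falls into the single tile (0, 0); each additional zoom level splits every tile into four.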

As said before, the hardware requirements are really low: pre-generated vector tiles of the whole world take up only about 50 GB. Therefore, the minimum requirement is set to 60 GB of hard drive space and 8 GB of RAM. If you want to serve raster tiles, you will need more disk space, as raster images are larger. Serving pre-rendered tiles needs less hardware than serving tiles on demand, where you have to run your own PostgreSQL, Nginx, and other services. The configuration is also significantly easier, and setting up the server requires only basic IT knowledge.
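Before downloading the planet, it can be handy to check that the target disk meets the 60 GB figure quoted above. The following is a minimal sketch using only the Python standard library; the helper name `has_enough_disk` and the choice of decimal gigabytes (10^9 bytes) are assumptions made for illustration.

```python
import shutil

# Minimum disk space quoted in the article, in gigabytes.
MIN_DISK_GB = 60


def has_enough_disk(free_bytes, required_gb=MIN_DISK_GB):
    """Return True if the free space covers the requirement.

    Assumes decimal gigabytes (1 GB = 10**9 bytes).
    """
    return free_bytes >= required_gb * 10**9


if __name__ == "__main__":
    # Check the directory that would be mounted as /data in the container.
    free = shutil.disk_usage(".").free
    status = "OK" if has_enough_disk(free) else "not enough space"
    print(f"{free / 10**9:.1f} GB free: {status}")
```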

A map of the entire world can run on a computer with 60 GB of hard drive space and 8 GB of RAM.

The solution based on the OpenMapTiles Server is fully scalable, both horizontally and vertically, and the scaling can be done dynamically based on the workload. Scaling requires a map server, a Memserver, and an attached volume, which makes it possible to multiply the machines. You can achieve the same result with Docker Swarm or Kubernetes.

Short graphical wizard where you select which services you want to run

Processing geospatial Big Data on a Docker cluster

The processing of big geodata can be split across a cluster using Docker container technology.

Our solution starts by dividing the whole workload into smaller jobs and sending them to separate machines. Each machine constantly reports metrics and logs to the control server. Once a job is marked as done, the finished work is sent to the output storage, the control server sends the machine another job, and the whole process starts again. The input and output storage can be anything from your own server to cloud services like Amazon S3 or Google Cloud Storage.
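The loop described above can be sketched on a single machine. In this simplified illustration, a thread pool stands in for the separate worker machines, a dictionary stands in for the output storage, and a list stands in for the logs sent to the control server. All names here (`split_into_jobs`, `process_job`, `run_cluster`) are hypothetical, and this is not the actual implementation used in our cluster.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def split_into_jobs(total_items, job_size):
    """Divide a range of work items into (start, end) chunks."""
    return [(start, min(start + job_size, total_items))
            for start in range(0, total_items, job_size)]


def process_job(job):
    """Stand-in for the real work (e.g. rendering) done by one worker."""
    start, end = job
    return {"job": job, "items_done": end - start}


def run_cluster(total_items, job_size, workers):
    """Dispatch jobs to workers and collect the finished results."""
    output_storage = {}  # stand-in for S3, Google Cloud Storage, or own server
    log = []             # stand-in for metrics and logs on the control server
    jobs = split_into_jobs(total_items, job_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # The pool hands a new job to a worker as soon as it becomes free.
        futures = [pool.submit(process_job, job) for job in jobs]
        for future in as_completed(futures):
            result = future.result()
            output_storage[result["job"]] = result["items_done"]
            log.append(f"done {result['job']}")
    return output_storage, log
```

For example, `run_cluster(10, 3, 2)` splits ten items into four jobs and processes them with two workers. In a real cluster the workers would be containers on separate machines, and the job queue would live on the control server.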

With the process described above, we were able to render the whole world's OpenStreetMap data with the OpenMapTiles project (126 million tiles) in one day using 32 machines, each equipped with 4 cores. On a single core, this job would otherwise take about 128 days of CPU time. The same technique was used for rendering raster data using our MapTiler Engine. By using a cluster, we were able to convert 60 TB of USA aerial imagery, create OMT Satellite, and process petabytes of satellite data for clients.
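The 128-day figure follows directly from the cluster size. The short calculation below reproduces it from the numbers in the text and also derives the average throughput per core, assuming the work was spread evenly over all cores for the full day.

```python
MACHINES = 32
CORES_PER_MACHINE = 4
WALL_CLOCK_DAYS = 1
TILES = 126_000_000

# Total CPU time spent, expressed in core-days.
core_days = MACHINES * CORES_PER_MACHINE * WALL_CLOCK_DAYS

# Average rendering speed of a single core, assuming an even load.
seconds_per_day = 24 * 60 * 60
tiles_per_core_second = TILES / (core_days * seconds_per_day)

print(core_days)                        # 128
print(round(tiles_per_core_second, 1))  # 11.4
```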

Want to learn more?

If you want to learn more, check the video from our presentation, which is available on YouTube. Do not forget to view the slides on Slideshare.